Large language models (LLMs) such as ChatGPT and Claude are typically accessed through cloud-based services. For businesses that prioritize data privacy, running LLMs locally is a strong alternative: prompts and responses are processed on your own hardware, so sensitive data never leaves your device. Let’s explore six free tools for running LLMs locally, offering privacy, customization, and cost savings.

Why Use Local LLMs?

Running LLMs locally offers several advantages:

- Privacy: Your prompts and chat history stay on your local machine.

- Customization: Advanced configurations for CPU threads, temperature, context length, and GPU settings.

- Cost Savings: Free to use with no monthly subscriptions, unlike cloud services that charge per API request.

- Offline Access: Run models without an internet connection.

- Reliability: Avoid connectivity issues associated with cloud services.
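To make the customization point concrete, here is a minimal sketch of what the temperature setting does during sampling. It assumes the usual softmax-with-temperature formulation; the function name and the example scores are hypothetical, and real tools apply this inside their inference engine rather than in user code.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]

# Hypothetical scores for three candidate tokens.
logits = [2.0, 1.0, 0.1]
focused = softmax_with_temperature(logits, 0.5)  # low temperature
varied = softmax_with_temperature(logits, 2.0)   # high temperature
```

A lower temperature concentrates probability on the top-scoring token, which gives more predictable output. A higher temperature flattens the distribution, which gives more varied output.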

Top Six Free Local LLM Tools

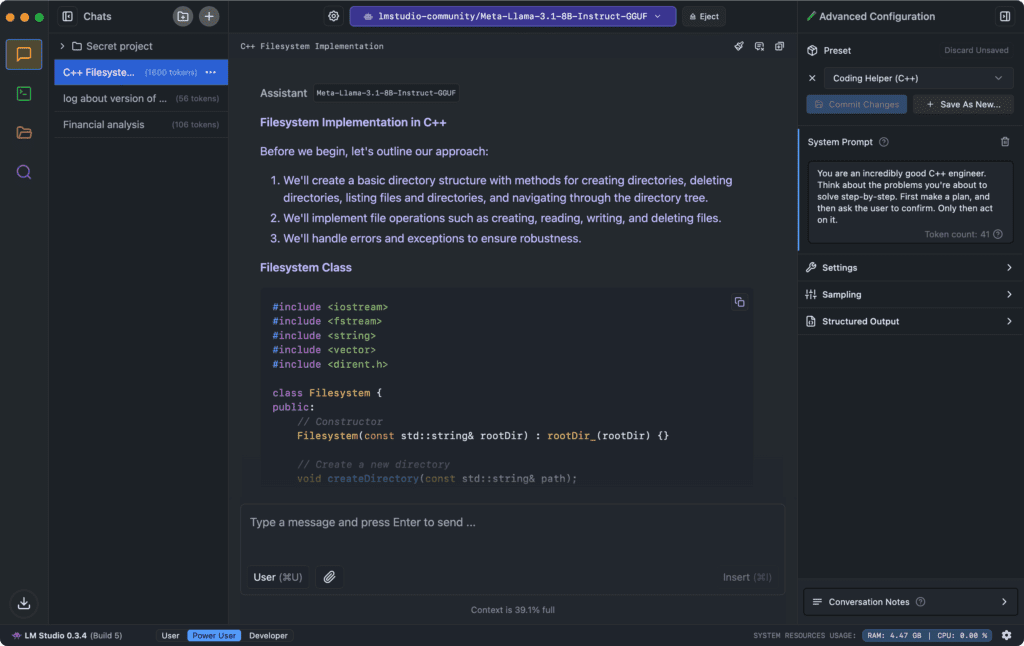

1. LM Studio

LM Studio is a user-friendly tool that runs model files in the .gguf format, including model families such as Llama and Mistral.

LM Studio stands out for its approachable interface, which makes it accessible even to those new to running LLMs locally. Its compatibility check estimates whether a model suits your hardware before you download it, preventing wasted time and resources. It can also start a local HTTP server that mimics cloud-based APIs, so developers can integrate local models into their applications. With extensive customization options, LM Studio suits both personal projects and business applications as an alternative to subscription-based services. Key features include:

- Model Customization: Adjust parameters like temperature and maximum tokens.

- Chat History: Save prompts for future use.

- Cross-Platform: Available on Linux, Mac, and Windows.

- Local Inference Server: Set up a local HTTP server for developers.
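As an illustration of the local inference server, the sketch below builds a chat request against an OpenAI-style endpoint. The port (1234), the `/v1/chat/completions` path, and the placeholder model name are assumptions based on LM Studio's defaults at the time of writing; check the server tab in your installation for the actual address and loaded model.

```python
import json
import urllib.request

# Assumption: the LM Studio server is running locally on its default port.
BASE_URL = "http://localhost:1234/v1"

def build_chat_request(prompt, model="local-model", temperature=0.7, max_tokens=256):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # placeholder name, replace with the model you loaded
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Summarize this paragraph")` only works while the local server is running with a model loaded. Nothing in the request leaves your machine.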

LM Studio is free for personal use and offers an intuitive interface with extensive customization options.

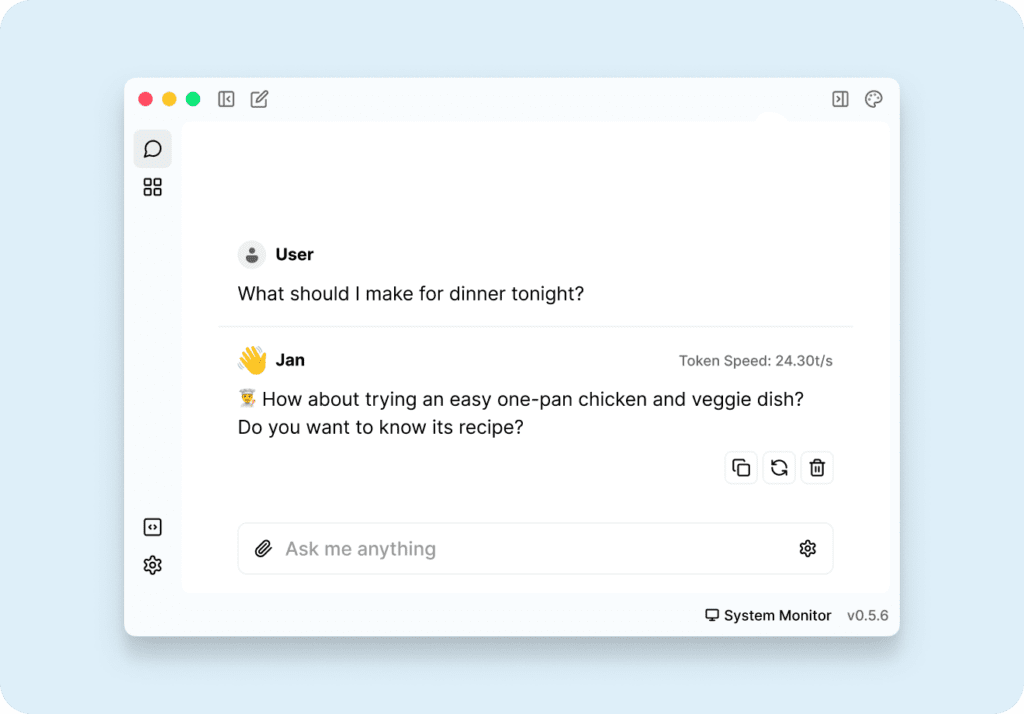

2. Jan

Jan is an open-source alternative to ChatGPT, allowing you to run models like Mistral offline.

Jan’s open-source nature fosters a collaborative environment where developers can contribute and extend its capabilities. Its support for importing models from platforms like Hugging Face provides flexibility in model selection. The inclusion of extensions like TensorRT enhances performance, making Jan suitable for both casual users and developers looking to optimize their AI workflows. With a strong community presence on GitHub and Discord, users can easily find support and share insights, further enriching the tool’s ecosystem. Key features include:

- Local Operation: Run models without an internet connection.

- Ready-to-Use Models: Comes with pre-installed models and supports importing from Hugging Face.

- Customization: Adjust inference parameters locally.

- Extensions: Supports extensions like TensorRT for enhanced performance.

Jan offers a clean interface and a strong community for support and contributions, with over seventy pre-installed models.

3. Llamafile

Backed by Mozilla, Llamafile converts LLMs into executable files for easy integration into applications. It runs on multiple operating systems, including Windows, macOS, and Linux.

Llamafile’s approach of packaging an LLM as a single executable file simplifies setup, making it accessible even to those without extensive technical knowledge. Its broad operating system and hardware support lets the same file run on different machines. By focusing on fast CPU inference, Llamafile enables efficient processing even on consumer-grade machines. Key features include:

- Executable File: Run LLMs with a single executable file.

- Model Conversion: Convert model files into .llamafile format.

- Offline Operation: Runs entirely offline for enhanced privacy.
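The single-executable workflow can be sketched as follows for macOS and Linux. This is a hedged example: the helper names are hypothetical, and launching through `sh -c` is an assumption drawn from the project's troubleshooting advice for systems that refuse to execute the file directly. On Windows, the usual approach is to rename the file with an .exe extension instead.

```python
import os
import shlex
import stat
import subprocess

def make_executable(path):
    """Equivalent of `chmod +x`: add execute permission for user, group, and others."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)

def build_launch_command(path):
    """Return a command that runs the file through the system shell."""
    return ["sh", "-c", shlex.quote(os.path.abspath(path))]

def launch(path):
    """Mark the downloaded file as executable, then start it as a child process."""
    make_executable(path)
    return subprocess.Popen(build_launch_command(path))
```

For example, `launch("model.llamafile")` would start a downloaded file with that (hypothetical) name. No installer or separate runtime is involved.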

Llamafile democratizes AI by making LLMs practical to run on consumer CPUs, with strong community support and fast offline performance.

4. GPT4ALL

GPT4ALL focuses on privacy and security, allowing you to run LLMs on mainstream consumer hardware.

GPT4ALL’s emphasis on privacy and security makes it a preferred choice for users handling sensitive data. Its ability to run entirely offline ensures that data remains secure, while its extensive model library allows users to experiment with various LLMs. The enterprise edition caters to businesses, providing the necessary support and licensing options to integrate AI into professional environments. With a large user base and active community, GPT4ALL continues to evolve, offering new features and improvements based on user feedback. Key features include:

- Privacy First: Keep sensitive data on your device.

- No Internet Required: Works completely offline.

- Model Exploration: Browse and download various LLMs.

- Enterprise Edition: Offers enterprise packages for businesses.

GPT4ALL has a large user base and active community, making it a reliable choice for local LLM use with a focus on privacy and security.

5. Ollama

Ollama lets you create local chatbots without API dependencies.

Ollama’s flexibility in model customization and its large collection of available models make it a versatile tool for developers. Its seamless integration with various applications, including mobile platforms, expands its usability across different devices. The active contributor base on GitHub ensures continuous updates and enhancements, keeping Ollama at the forefront of local LLM tools. With support for importing models from PyTorch, Ollama offers a comprehensive solution for developers looking to leverage existing models in their projects. It offers a range of features:

- Model Customization: Convert and run .gguf model files.

- Model Library: Large collection of models to try.

- Community Integrations: Seamless integration with web and desktop applications.
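One way to run and customize a local .gguf file with Ollama is to describe it in a Modelfile. The sketch below renders such a file as text. The `FROM`, `PARAMETER`, and `SYSTEM` instructions are part of Ollama's Modelfile format, while the helper name, the file name, and the chosen values are hypothetical.

```python
def render_modelfile(gguf_path, temperature=0.7, context_length=4096, system_prompt=None):
    """Return the text of an Ollama Modelfile for a local .gguf model."""
    lines = [
        f"FROM {gguf_path}",                      # path to the local model weights
        f"PARAMETER temperature {temperature}",   # sampling temperature
        f"PARAMETER num_ctx {context_length}",    # context window in tokens
    ]
    if system_prompt:
        lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"

# Hypothetical model file name, used for illustration only.
modelfile_text = render_modelfile(
    "./my-model.gguf",
    temperature=0.2,
    system_prompt="You are a concise assistant.",
)
```

After saving the text to a file named Modelfile, `ollama create my-model -f Modelfile` registers the model and `ollama run my-model` starts a chat with it.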

Ollama has a large number of contributors and is highly extendable, offering seamless integration with various applications.

6. LLaMa.cpp

LLaMa.cpp is the backend technology powering many local LLM tools. It runs inference for large models with minimal configuration.

LLaMa.cpp’s minimal setup and high performance make it a powerful backend for running LLMs locally. Its support for a wide range of models and hardware configurations ensures that developers can optimize their AI applications for various environments. The ability to run both locally and in the cloud provides flexibility, catering to different deployment scenarios. With a focus on performance and ease of use, LLaMa.cpp serves as a reliable foundation for developers building AI-powered solutions.

- Performance: Excellent local performance on various hardware.

- Supported Models: Supports popular LLMs like Mistral and Falcon.

- Frontend AI Tools: Supports open-source LLM UI tools.
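Frontend tools typically talk to LLaMa.cpp through its bundled HTTP server. The following sketch shows what such a call might look like. It assumes the server is already running on port 8080 and exposes a `/completion` endpoint that accepts `prompt`, `n_predict`, and `temperature` and returns the text under `content`; verify these against the server documentation for your build, since options change between releases.

```python
import json
import urllib.request

# Assumption: the LLaMa.cpp server was started locally on port 8080.
SERVER_URL = "http://localhost:8080"

def build_completion_payload(prompt, n_predict=128, temperature=0.8):
    """Request body: the prompt, a cap on generated tokens, and the temperature."""
    return {"prompt": prompt, "n_predict": n_predict, "temperature": temperature}

def extract_text(response_body):
    """Pull the generated text out of the parsed JSON response."""
    return response_body["content"]

def complete(prompt):
    """POST the prompt to the local server and return the generated text."""
    request = urllib.request.Request(
        f"{SERVER_URL}/completion",
        data=json.dumps(build_completion_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return extract_text(json.load(resp))
```

Keeping the payload builder and response parser separate from the network call makes it easy to point the same code at a different local backend later.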

LLaMa.cpp offers a minimal setup with high performance, making it a robust choice for developers with support for various hardware.

Conclusion

Running LLMs locally offers numerous benefits, including enhanced privacy, customization, and cost savings. Tools like LM Studio, Jan, Llamafile, GPT4ALL, Ollama, and LLaMa.cpp provide powerful options for developers to experiment with LLMs without relying on cloud services. Whether you’re a developer looking to fine-tune models or a business prioritizing data privacy, these tools offer a range of features to suit your needs.

Founder of ToolsLib, Designer, Web and Cybersecurity Expert.

Passionate about software development and crafting elegant, user-friendly designs.